WON’T INNOVATION, SUBSTITUTION, AND EFFICIENCY KEEP US GROWING?

I want to believe in innovation and its possibilities, but I am more thoroughly convinced of entropy. Most of what we do merely creates local upticks in organization in an overall downward sloping curve. In that regard, technology is a bag of tricks that allows us to slow and even reverse the trend, sometimes globally, sometimes only locally, but always only temporarily and at increasing aggregate energy cost.

— Paul Kedrosky (entrepreneur, editor of the econoblog Infectious Greed)

In the course of researching and writing this book, I discussed its central thesis — that world economic growth has come to an end — with several economists, various businesspeople, a former hedge fund manager, a topflight business consultant, and the former managing director of one of Wall Street’s largest investment banks, as well as several ecologists and environmental activists. The most common reaction (heard as often from the environmentalists as the bankers) was along the lines of: “But capitalism has a few more tricks up its sleeve. It’s infinitely creative. Even if we’ve hit environmental limits to energy or water, the mega-rich will find ways to amass yet more capital on the way down the depletion slope. It’ll still look like growth to them.”

Most economists would probably agree with the view that environmental constraints and a crisis in the financial world don’t add up to the end of growth — just a speed bump in the highway of progress. That’s because smart people will always be thinking of new technologies and of new ways to do more with less. And these will in turn be the basis of new commercial products and business models.

Talk of limits typically elicits dismissive references to the failed warnings of Thomas Malthus, the 18th-century economist who reasoned that population growth would inevitably (and soon) outpace food production, leading to a general famine. Malthus was obviously wrong, at least in the short run: food production expanded throughout the 19th and 20th centuries to feed a fast-growing population. He failed to foresee the introduction of new hybrid crop varieties and chemical fertilizers, and the development of industrial farm machinery. The implication, whenever Malthus’s ghost is summoned, is that all claims that environmental limits will overtake growth are likewise wrong, and for similar reasons. New inventions and greater efficiency will always trump looming limits.1

In this chapter, we will examine the factors of efficiency, substitution, and innovation critically and see why — while these are key to our efforts to adapt to resource limits — they are incapable of removing those limits, and are themselves subject to the law of diminishing returns. And returns on investments in these strategies are in many instances already quickly diminishing.

Substitutes Forever

It is often said that “the Stone Age didn’t end for lack of stones, and the oil age won’t end for lack of oil; rather, it will end when we find a cheaper, better source of energy.” Variations on that maxim have appeared in ads from ExxonMobil, statements from the Saudi Arabian government, and blogs from pro-growth think tanks — all arguing that the world faces no energy shortages, only energy opportunities.

It’s true: the Stone Age ended when our ancient ancestors invented metal tools and found them to be superior to stone tools for certain purposes, not because rocks became scarce. Similarly, in the late 19th century, early industrial economies shifted from using whale oil for lubrication and lamp fuel to petroleum, or “rock oil.” Whale oil was getting expensive because whales were being hunted to the point that their numbers were dropping precipitously. Petroleum proved not only cheaper and more abundant; it also turned out to have a greater variety of uses. It was a superior substitute for whale oil in almost every respect.

Fast forward to the early 21st century. Now the cheap rock oil is gone. It’s time for the next substitute to appear — a magic elixir that will make nasty old petroleum look as obsolete and impractical as whale oil. But what exactly is this “new oil”?

Economic theory is adamant on the point: as a resource becomes scarce, its price will rise until some other resource that can serve the same need becomes cheaper by comparison. That the replacement will prove superior is not required by theory.

Well, there certainly are substitutes for oil, but it’s difficult to see any of them as superior — or even equivalent — from a practical, economic point of view.2

Just a few years ago, ethanol made from corn was hailed as the answer to our dependence on depleting, climate-changing petroleum. Massive amounts of private and public investment capital were steered toward the ethanol industry. Government mandates to blend ethanol into gasoline further supported the industry’s development. But that experiment hasn’t turned out well. The corn ethanol industry went through a classic boom-and-bust cycle, and expanding production of the fuel hit barriers that were foreseeable from the very beginning. It takes an enormous land area to produce substantial amounts of ethanol, and this reduces the amount of cropland available for growing food; it increases soil erosion and fertilizer pollution while forcing food prices higher. By 2008, soil scientists and food system analysts were united in opposing further ethanol expansion.3

For the market, ethanol proved too expensive to compete with gasoline. But from an energy point of view the biggest problem with corn ethanol was that the amount of energy required to grow the crop, harvest and collect it, and distill it into nearly pure alcohol was perilously close to the amount of energy that the fuel itself would yield when burned in an engine. This meant that ethanol wasn’t really much of an energy source at all; making it was just a way of taking existing fuels (petroleum and natural gas) and using them (in the forms of tractor fuel, fertilizer, and fuel for distillation plants) to produce a different fuel that could be used for the same purposes as gasoline. Experts argued back and forth: one critic said the energy balance of corn ethanol was actually negative (less than 1:1) — meaning that ethanol was a losing proposition on a net energy basis.4 But then a USDA study claimed a positive energy balance of 1.34:1.5 Other studies yielded slightly varying numbers (the differences had to do with deciding which energy inputs should be included in the analysis).6 From a broader perspective, this bickering over decimal-place accuracy was pointless: in its heyday, oil had enjoyed an EROEI of 100:1 or more, and it is clear that for an industrial society to function it needs primary energy sources with a minimum EROEI of between 5:1 and 10:1.7 With an overall societal EROEI of 3:1, for example, roughly a third of all of that society’s effort would have to be devoted just to obtaining the energy with which to accomplish all the other things that a society must do (such as manufacture products, carry on trade, transport people and goods, provide education, engage in scientific research, and maintain basic infrastructure). Since even the most optimistic EROEI figure for corn ethanol is significantly below that figure, it is clear that this fuel cannot serve as a primary energy source for an industrial society like the United States.
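The arithmetic behind these ratios can be sketched in a few lines. This is a minimal illustration, assuming the simplest possible accounting, in which the energy invested is paid out of the gross energy returned; the function names are hypothetical, and the ratios are the ones quoted in the text.

```python
def reinvested_fraction(eroei: float) -> float:
    """Share of gross energy output that must go back into obtaining energy."""
    if eroei <= 0:
        raise ValueError("EROEI must be positive")
    return 1.0 / eroei


def net_energy_fraction(eroei: float) -> float:
    """Share of gross energy output left over for everything else.

    A negative value means the source consumes more energy than it yields.
    """
    return 1.0 - reinvested_fraction(eroei)


# Ratios quoted in the text; the labels are only for display.
ratios = {
    "oil in its heyday (100:1)": 100.0,
    "suggested minimum, upper end (10:1)": 10.0,
    "suggested minimum, lower end (5:1)": 5.0,
    "illustrative society (3:1)": 3.0,
    "corn ethanol, USDA figure (1.34:1)": 1.34,
}

for label, ratio in ratios.items():
    print(
        f"{label}: {reinvested_fraction(ratio):.0%} reinvested, "
        f"{net_energy_fraction(ratio):.0%} left over"
    )
```

Under this simplified accounting, the gap between 100:1 and 10:1 costs society relatively little, while each step below 5:1 eats rapidly into the surplus. That is why disputes over the second decimal place of ethanol's ratio matter less than its overall magnitude.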

The problem remains for so-called second- and third-generation bio-fuels — cellulosic ethanol made from forest and crop wastes and biodiesel squeezed from algae. Extraordinary preliminary claims are being made for the potential scalability and energy balance of these fuels, which so far are still in the experimental stages, but there is a basic reason for skepticism about such claims. With all biofuels we are trying to do something inherently very difficult — replace one fuel, which nature collected and concentrated, with another fuel whose manufacture requires substantial effort on our part to achieve the same result. Oil was produced over the course of tens of millions of years without need for any human work. Ancient sunlight energy was chemically gathered and stored by vast numbers of microscopic aquatic plants, which fell to the bottoms of seas and were buried under sediment and slowly transformed into energy-dense hydrocarbons. All we have had to do was drill down to the oil-bearing rock strata, where the oil itself was often under great pressure so that it flowed easily up to the surface. To make biofuels, we must engage in a variety of activities that require large energy expenditures for growing and fertilizing crops, gathering crops or crop residues, pressing algae to release oils, maintaining and cleaning algae bioreactors, or distilling alcohol to a high level of purity (this is only a partial list). Even with substantial technical advances in each of these areas, it will be impossible to compete with the high level of energy payback that oil enjoyed in its heyday.

This is not to say that biofuels have no future. As petroleum becomes more scarce and expensive we may find it essential to have modest quantities of alternative fuels available for certain purposes even if those alternatives are themselves expensive, in both monetary and energy terms. We will need operational emergency vehicles, agricultural machinery, and some aircraft, even if we have to subsidize them with energy we might ordinarily use for other purposes. In this case, biofuels will not serve as one of our society’s primary energy sources — the status that petroleum enjoys today. Indeed, they will not comprise much of an energy source at all in the true sense, but will merely serve as a means to transform energy that is already available into fuels that can be used in existing engines in order to accomplish selected essential goals. In other words, biofuels will substitute for oil on an emergency basis, but not in a systemic way.

The view that biofuels are unlikely to fully substitute for oil anytime soon is supported by a recent University of California (Davis) study that concludes, on the basis of market trends only, that “At the current pace of research and development, global oil will run out 90 years before replacement technologies are ready.”8

It could be objected that we are thinking of substitutes too narrowly. Why insist on maintaining current engine technology and simply switching the fuel? Why not use a different drive train altogether?

Electric cars have been around nearly as long as the automobile itself. Electricity could clearly serve as a substitute for petroleum — at least when it comes to ground transportation (aviation is another story — more on that in a moment). But the fact that electric vehicles have failed for so long to compete with gasoline- and diesel-powered vehicles suggests there may be problems.

In fact, electric cars have advantages as well as disadvantages when compared to fuel-burning cars. The main advantages of electrics are that their energy is used more efficiently (electric motors translate nearly all their energy into motive force, while internal combustion engines are much less efficient), they need less drive-train maintenance, and they are more environmentally benign (even if they’re running on coal-derived electricity, they usually entail lower carbon emissions due to their much higher energy efficiency). The drawbacks of electric vehicles are partly to do with the limited ability of batteries to store energy, as compared to conventional liquid fuels. Gasoline carries about 46 megajoules of energy per kilogram (MJ/kg), while lithium-ion batteries can store only 0.5 MJ/kg. Improvements are possible, but the ultimate practical limit of chemical energy storage is still only about 6–9 MJ/kg.9 This is why we’ll never see battery-powered airliners: the batteries would be way too heavy to allow planes to get off the ground. This doesn’t mean research into electric aircraft should not be pursued: There have been successful experiments with ultra-light solar-powered planes, and electric planes could come in handy in a future where most transport will be by boat, rail, bicycle, or foot. But these will be special-purpose aircraft that can carry only one or two passengers.
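To see what the energy-density figures imply for weight, here is a rough sketch. The densities come from the text; the 40 kg fuel load and the engine and motor efficiencies (25 and 90 percent) are purely illustrative assumptions, not measured values.

```python
GASOLINE_MJ_PER_KG = 46.0  # figure quoted in the text
LI_ION_MJ_PER_KG = 0.5     # figure quoted in the text


def equivalent_battery_mass_kg(
    fuel_kg: float,
    battery_mj_per_kg: float = LI_ION_MJ_PER_KG,
    engine_efficiency: float = 1.0,
    motor_efficiency: float = 1.0,
) -> float:
    """Battery mass needed to deliver the same useful work as a load of gasoline.

    With both efficiencies left at 1.0 this is a raw stored-energy comparison.
    """
    useful_work_mj = fuel_kg * GASOLINE_MJ_PER_KG * engine_efficiency
    stored_energy_needed_mj = useful_work_mj / motor_efficiency
    return stored_energy_needed_mj / battery_mj_per_kg


fuel_load_kg = 40.0  # hypothetical fuel load, chosen only for illustration

raw = equivalent_battery_mass_kg(fuel_load_kg)
adjusted = equivalent_battery_mass_kg(
    fuel_load_kg,
    engine_efficiency=0.25,  # assumed, not from the text
    motor_efficiency=0.90,   # assumed, not from the text
)
print(f"Raw stored-energy equivalent: {raw:,.0f} kg of batteries")
print(f"Efficiency-adjusted equivalent: {adjusted:,.0f} kg of batteries")
```

Even after crediting the electric motor with a large efficiency advantage, the battery pack in this sketch weighs many times more than the fuel it replaces. That is the core of the range problem for cars and of the weight problem for aircraft.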

The low energy density (by weight) of batteries tends to limit the range of electric cars. This problem can be solved with hybrid power trains — using a gasoline engine to charge the batteries, as in the Chevy Volt, or to push the car directly part of the time, as with the Toyota Prius — but that adds complexity and expense.

So substituting batteries and electricity for petroleum works in some instances, but even in those cases it offers less utility (if it offered more utility, we would all already be driving electric cars).10

Increasingly, substitution is less economically efficient. But surely, in a pinch, can’t we just accept the less-efficient substitute? In emergency or niche applications, yes. But if the less-efficient substitute must replace a resource of profound economic importance (like oil), or if a large number of resources have to be replaced with less-useful substitutes, then the overall result for society is a reduction — perhaps a sharp reduction — in its capacity to achieve economic growth.

As we saw in Chapter 3, in our discussion of the global supply of minerals, when the quality of an ore drops the amount of energy required to extract the resource rises. All over the world mining companies are reporting declining ore quality.11 So in many if not most cases it is no longer possible to substitute a rare, depleting resource with a more abundant, cheaper resource; instead, the available substitutes are themselves already rare and depleting.

Theoretically, the substitution process can go on forever — as long as we have endless energy with which to obtain the minerals we need from ores of ever-declining quality. But to produce that energy we need more resources. Even if we are using only renewable energy, we need steel for wind turbines and coatings for photovoltaic panels. And to extract those resources we need still more energy, which requires more resources, which requires more energy. At every step down the ladder of resource quality, more energy is needed just to keep the resource extraction process going, and less energy is available to serve human needs (which presumably is the point of the exercise).12

The issues arising with materials synthesis are similar. In principle it is possible to synthesize oil from almost any organic material. We can make petroleum-like fuels from coal, natural gas, old tires, even garbage. However, doing so can be very costly, and the process can consume more energy than the resulting synthetic oil will deliver as a fuel, unless the material we start with is already similar to oil.

It’s not that substitution can never work. Recent years have seen the development of new catalysts in fuel cells to replace depleting, expensive platinum, and new ink-based materials for photovoltaic solar panels that use copper indium gallium diselenide (CIGS) and cadmium telluride to replace single-crystalline silicon. And of course renewable wind, solar, geothermal, and tidal energy sources are being developed and deployed as substitutes for coal.

We will be doing a lot of substituting as the resources we currently rely on deplete. In fact, materials substitution is becoming a primary focus of research and development in many industries. But in the most important cases (including oil), the substitutes will probably be inferior in terms of economic performance, and therefore will not support economic growth.

BOX 4.1 Substitution Time Lags and Economic Consequences

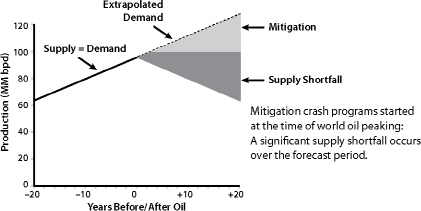

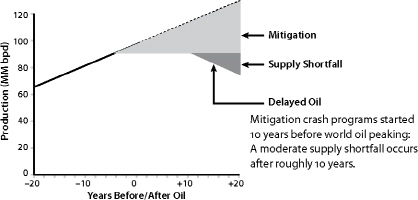

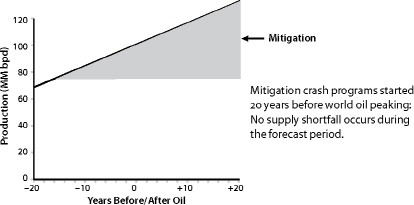

Assume that world oil production peaks this year and begins declining at a rate of two percent per year. We will then need to increase volumes of replacement fuels by this amount plus about 1.5 percent annually in order to fuel a modest rate of economic growth (that’s 3.5 percent total). We can theoretically achieve the same amount of growth by increasing transport energy efficiency by 3.5 percent per year, or by pursuing some combination of these two strategies, as long as the total effect is to adjust to declining oil availability while maintaining growth. We will probably not achieve either our substitution or efficiency goals during the first year (it takes time to develop new policies and technologies), so we will face an oil shortfall amounting to 3.5 percent of the total, minus whatever small increment we are able to offset with replacement fuels and efficiency on a short-term basis. The next year will see a similar situation. If, at the moment production started declining, we were smart enough to start investing heavily in substitutes and more efficient transport infrastructure (electric cars and trains), then those investments would start to pay off in three to four years, but it will take even longer — four to five years — for substitution and efficiency to offer significant help.13
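The size of the shortfall in this scenario can be sketched numerically. The snippet below is a simplified illustration that assumes no substitution or efficiency gains at all and compounds both rates annually; the function name and the baseline of 100 units are hypothetical.

```python
def unmet_fuel_gap(years, decline=0.02, growth=0.015, baseline=100.0):
    """Yearly gap between the fuel needed for growth and the oil available.

    Assumes no mitigation whatsoever, so this is an upper bound on the gap.
    Returns a list of (year, oil_available, fuel_needed, gap) tuples.
    """
    rows = []
    for year in range(1, years + 1):
        oil_available = baseline * (1.0 - decline) ** year
        fuel_needed = baseline * (1.0 + growth) ** year
        rows.append((year, oil_available, fuel_needed, fuel_needed - oil_available))
    return rows


for year, oil, needed, gap in unmet_fuel_gap(5):
    print(f"Year {year}: oil {oil:6.2f}, needed {needed:6.2f}, gap {gap:5.2f}")
```

In the first year the gap equals the 3.5 percent described above. Because the two rates compound in opposite directions, the gap widens every year that replacement fuels and efficiency measures are not yet delivering.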

During these five years, unless we have plans in place to handle fuel shortfalls, adaptation will not be orderly or painless. With a reduction of two percent in oil availability, we may experience a decrease in GDP of three or four percent. Investors will become cautious and job markets will contract. There is no way to know how markets will respond during this period of high insecurity about the future energy supply. The values of currencies, the stock market, bonds, and real estate are all tied to the belief that the economy will grow in the future. With three, four, or five years of recession or depression, belief in future economic growth could wane, causing markets to fall further. The value of many of these assets could fall very significantly. And of course in a recession it may be harder to allocate resources towards innovation.

This is why it is essential to begin investing in efficiency and alternative energy as soon as possible, and choose wisely with regard to those investments.14

FIGURE 38. The Cost of Delaying Preparatory Response to Peak Oil. Source: Robert L. Hirsch, Roger Bezdek, and Robert Wendling, 2005, “Peaking of World Oil Production: Impacts, Mitigation, & Risk Management.”

Energy Efficiency to the Rescue

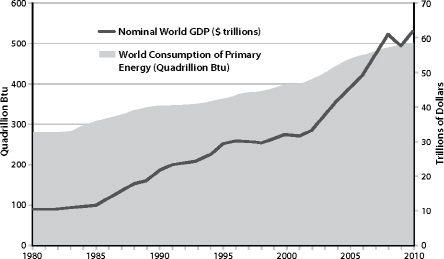

The historic correlation between economic growth and increased energy consumption is controversial, and I promised in Chapter 3 to return to the question of whether and to what degree it is possible to de-link or decouple the two.

While it is undisputed that, during the past two centuries, both energy use and GDP have grown dramatically, some analysts argue that the causal link between energy consumption and growth is not tight, and that energy consumption and economic growth can be decoupled by increasing the efficiency with which energy is used. That is, economic growth can be achieved while using less energy.15

This has already happened, at least to some degree. According to the US Energy Information Administration,

From the early 1950s to the early 1970s, US total primary energy consumption and real GDP increased at nearly the same annual rate. During that period, real oil prices remained virtually flat. In contrast, from the mid-1970s to 2008, the relationship between energy consumption and real GDP growth changed, with primary energy consumption growing at less than one-third the previous average rate and real GDP growth continuing to grow at its historical rate. The decoupling of real GDP growth from energy consumption growth led to a decline in energy intensity that averaged 2.8 percent per year from 1973 to 2008.16

Translation: We’re saved! We just need to double down on whatever we’ve been doing since 1973 that led to this decline in the amount of energy it took to produce GDP growth.17

However, several analysts have pointed out that the decoupling trend of the past 40 years conceals some explanatory factors that undercut any realistic expectation that energy use and economic growth can diverge much further.18

One such factor is the efficiency gained through fuel switching. Not all energy is created equal, and it’s possible to derive economic benefits from changing energy sources while still using the same amount of total energy. Often energy is measured purely by its heating value, and if one considers only this metric then a British Thermal Unit (Btu) of oil is by definition equivalent to a Btu of coal, electricity, or firewood. But for practical economic purposes, every energy source has a unique profile of advantages and disadvantages based on factors like energy density, portability, and cost of production. The relative prices we pay for natural gas, coal, oil, and electricity reflect the differing economic usefulness of these sources: a Btu of coal usually costs less than a Btu of natural gas, which is cheaper than a Btu of oil, which is cheaper than a Btu of electricity.

Electricity is a relatively expensive form of energy but it is very convenient to use (try running your computer directly on coal!). Electricity can be delivered to wall outlets in billions of rooms throughout the world, enabling consumers easily to operate a fantastic array of gadgets, from toasters and blenders to iPad chargers. With electricity, factory owners can run computerized monitoring devices that maximize the efficiency of automated assembly lines. Further, electric motors can be highly efficient at translating energy into work. Compared to other energy sources, electricity gives us more economic bang for each Btu expended (for stationary as opposed to mobile applications).

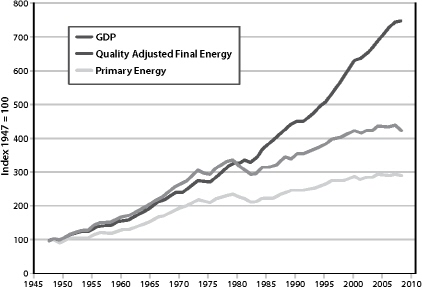

As a result of technology developments and changes in energy prices, the US and several other industrial nations have altered the ways they have used primary fuels over the past few decades. And, as several studies during this period have confirmed, once the relationship between GDP growth and energy consumption is corrected for energy quality, much of the historic evidence for energy-economy decoupling disappears.19 The Divisia index is a method for aggregating heat equivalents by their relative prices, and Figure 40 charts US GDP, Divisia-corrected energy consumption, and non-corrected energy consumption. According to Cutler Cleveland of the Center for Energy and Environmental Studies at Boston University, “This quality-corrected measure of energy use shows a much stronger connection with GDP [than non-corrected measures]. This visual observation is corroborated by econometric analysis that confirms a strong connection between energy use and GDP when energy quality is accounted for.”20
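A small sketch may help show how a price-weighted aggregate can rise while total heat content stays flat. It uses the standard discrete approximation to a Divisia index, in which each fuel's log growth is weighted by its average share of total energy spending. The two-fuel economy, its quantities, and its prices are invented for illustration, and nothing here should be read as Cleveland's exact procedure.

```python
import math


def cost_shares(quantities, prices):
    """Each fuel's share of total energy spending."""
    spending = {fuel: quantities[fuel] * prices[fuel] for fuel in quantities}
    total = sum(spending.values())
    return {fuel: amount / total for fuel, amount in spending.items()}


def divisia_log_growth(q0, p0, q1, p1):
    """Log growth of quality-weighted energy use between two periods.

    Discrete approximation: average cost share times log quantity change,
    summed over fuels. All quantities must be strictly positive.
    """
    s0 = cost_shares(q0, p0)
    s1 = cost_shares(q1, p1)
    return sum(
        0.5 * (s0[fuel] + s1[fuel]) * math.log(q1[fuel] / q0[fuel]) for fuel in q0
    )


# Hypothetical two-fuel economy: heat units used, and price per heat unit.
prices = {"coal": 1.0, "electricity": 5.0}
before = {"coal": 60.0, "electricity": 40.0}
after = {"coal": 40.0, "electricity": 60.0}

heat_growth = math.log(sum(after.values()) / sum(before.values()))
quality_growth = divisia_log_growth(before, prices, after, prices)
print(f"Heat-equivalent log growth: {heat_growth:.3f}")
print(f"Price-weighted log growth:  {quality_growth:.3f}")
```

In this invented case the heat-equivalent total does not change at all, yet the price-weighted measure grows because use has shifted toward the fuel that the market values more highly. A GDP series compared against the first measure would appear to decouple from energy; compared against the second, much less so.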

Cleveland notes that, “Declines in the energy/GDP ratio are associated with the general shift from coal to oil, gas, and primary electricity.” This holds true for many countries Cleveland and colleagues have examined.21 His conclusion is highly relevant to our discussion of Peak Oil and energy substitution: “The manner in which these improvements have been... achieved should give pause for thought. If decoupling is largely illusory, any rise in the cost of producing high quality energy vectors could have important economic impacts.... If the substitution process cannot continue, further reductions in the E/GDP ratio would slow.”22

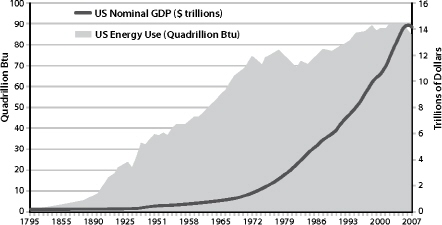

FIGURE 39. World Energy Use and GDP. Sources: US Energy Information Administration, International Monetary Fund.

Another often-ignored factor skewing the energy-GDP relationship is outsourcing of production. In the 1950s, the US was an industrial powerhouse, exporting manufactured products to the rest of the world. By the 1970s, Japan was becoming the world’s leading manufacturer of a wide array of electronic consumer goods, and in the 1990s China became the source for an even larger basket of products, ranging from building materials to children’s toys. By 2005, the US was importing a substantial proportion of its non-food consumer goods from China, running an average trade deficit with that country of close to $17 billion per month.23 In effect, China was burning its coal to make America’s consumer goods. The US derived domestic GDP growth from this commerce as Walmart sold mountains of cheap products to eager shoppers, while China expended most of the Btus. The American economy grew without using as much energy — in America — as it would have if those goods had been manufactured domestically.

FIGURE 40A. Energy Use and Economic Growth in the United States, 1795–2009. Sources: US Energy Information Administration, US Bureau of Economic Analysis, Louis Johnston and Samuel H. Williamson, "What Was the US GDP Then?" MeasuringWorth, 2010.

FIGURE 40B. Decoupling GDP and Energy Use in the US. Changes in energy quality account for much of the divergence between energy consumption and GDP since 1980. Other factors include outsourcing of production and financialization of the economy. Sources: US Energy Information Administration and Bureau of Economic Analysis; Cutler Cleveland, Encyclopedia of Earth.

There is one more factor that helps explain historic US “decoupling” of GDP growth from growth in energy consumption — the “financialization” of the economy (discussed in Chapter 2). Cutler Cleveland notes, “A dollar’s worth of steel requires 93,000 BTU to produce in the United States; a dollar’s worth of financial services uses 9,500 BTU.”24 As the US has concentrated less on manufacturing and building infrastructure, and more on lending and investing, GDP has increased with a minimum of energy consumption growth. While the statistics seem to show that we are becoming more energy efficient as a nation, to the degree that this efficiency is based on blowing credit bubbles it doesn’t have much of a future. As we saw in Chapter 2, there are limits to debt.
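Cleveland's two figures are enough to show how a change in the mix of output lowers measured energy intensity on its own. The sketch below assumes a hypothetical economy with only two sectors, one as energy-intensive as steel and one as light as financial services; the sector shares are invented for illustration.

```python
STEEL_BTU_PER_DOLLAR = 93_000.0   # figure quoted by Cleveland
FINANCE_BTU_PER_DOLLAR = 9_500.0  # figure quoted by Cleveland


def economy_intensity(finance_share: float) -> float:
    """Average Btu per dollar of output in a two-sector economy.

    finance_share is the fraction of output from the finance-like sector;
    the remainder is assumed to come from the steel-like sector.
    """
    if not 0.0 <= finance_share <= 1.0:
        raise ValueError("finance_share must be between 0 and 1")
    return (
        finance_share * FINANCE_BTU_PER_DOLLAR
        + (1.0 - finance_share) * STEEL_BTU_PER_DOLLAR
    )


before = economy_intensity(0.10)  # hypothetical starting mix
after = economy_intensity(0.30)   # hypothetical later mix
print(f"Intensity before: {before:,.0f} Btu per dollar")
print(f"Intensity after:  {after:,.0f} Btu per dollar")
print(f"Apparent efficiency gain: {1.0 - after / before:.1%}")
```

Neither sector becomes any more efficient in this sketch. The entire apparent gain comes from shifting output toward the sector that needs less energy per dollar.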

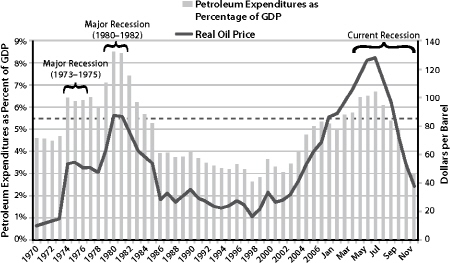

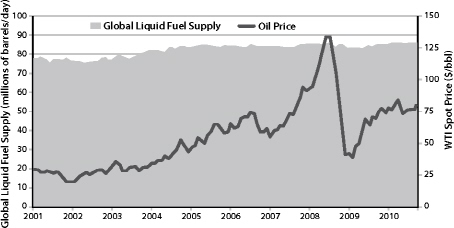

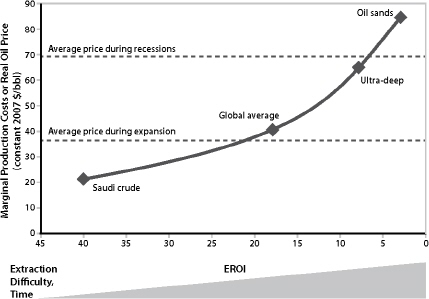

The actual tightness of the relationship between energy use and GDP is illustrated in the recent research of Charles Hall and David Murphy at the State University of New York at Syracuse, which shows that, since 1970, high oil prices have been strongly correlated with recessions, and low oil prices with economic expansion. Recession tends to hit when oil prices reach an inflation-adjusted range of $80 to $85 a barrel, or when the aggregate cost of oil for the nation equals 5.5 percent of GDP.25 If America had truly decoupled its energy use from GDP growth, then there wouldn’t be such a strong correlation, and high energy prices would be a matter of little concern.

So far we’ve been considering a certain kind of energy efficiency: energy consumed per unit of GDP. But energy efficiency is more commonly understood narrowly, as the efficiency with which energy is transformed into work. This kind of energy efficiency can be achieved in innumerable ways and instances throughout society, and it is almost invariably a very good thing. Sometimes, however, unrealistic claims are made for our potential to use energy efficiency to boost economic growth.

FIGURE 41A. From Hall and Murphy. The dotted line represents a threshold for petroleum expenditure as a percentage of GDP. When expenditures rise above this line, the economy begins to move towards recession. Distillate fuel oil, motor gasoline, LPG, and jet fuel are all included in petroleum expenditures. Source: Adapted from David J. Murphy and Charles A. S. Hall, 2011. “Energy return on investment, peak oil, and the end of economic growth” in Ecological Economics Reviews. Robert Costanza, Karin Limburg & Ida Kubiszewski, Eds. Ann. N. Y. Acad. Sci. 1219:52–72.

FIGURE 41B. Liquid Fuel — Supply vs. Price. Source: US Energy Information Administration.

FIGURE 41C. Oil Production Costs from Various Sources as a Function of Energy Returned on Energy Invested (EROI). The dotted lines represent the real oil price averaged over both recessions and expansions during the period from 1970 through 2008. EROI data for oil sands come from Murphy and Hall, the EROI values for both Saudi Crude and ultradeep water were interpolated from other EROI data in Murphy and Hall, data on the EROI of average global oil production are from Gagnon et al., and the data on the cost of production come from Cambridge Energy Research Associates. Source: Adapted from David J. Murphy and Charles A. S. Hall, 2011. “Energy return on investment, peak oil, and the end of economic growth” in Ecological Economics Reviews. Robert Costanza, Karin Limburg & Ida Kubiszewski, Eds. Ann. N. Y. Acad. Sci. 1219:52–72.

Since the 1970s, Amory Lovins of Rocky Mountain Institute has been advocating doing more with less and has demonstrated ingenious and inspiring ways to boost energy efficiency. His 1998 book Factor Four argued that the US could simultaneously double its total energy efficiency and halve resource use.26 More recently, he has upped the ante with “Factor 10” — the goal of maintaining current productivity while using only ten percent of the resources.27

Lovins has advocated a “negawatt revolution,” arguing that utility customers don’t want kilowatt-hours of electricity; they want energy services — and those services can often be provided in far more efficient ways than is currently done. In 1994, Lovins and his colleagues initiated the “Hypercar” project, with the goal of designing a sleek, carbon fiber-bodied hybrid that would achieve a three- to five-fold improvement in fuel economy while delivering equal or better performance, safety, amenity, and affordability as compared with conventional cars. Some innovations resulting from Hypercar research have made their way to market, though today hybrid-engine cars still make up only a small share of vehicles sold.

While his contributions are laudable, Lovins has come under criticism for certain of his forecasts regarding what efficiency would achieve. Some of those include:

• Renewables will take huge swaths of the overall energy market (1976);

• Electricity consumption will fall (1984);

• Cellulosic ethanol will solve our oil import needs (repeatedly);

• Efficiency will lower energy consumption (repeatedly).28

The reality is that renewables have only nibbled at the overall energy market; electricity consumption has grown; cellulosic ethanol is still in the R&D phase (and faces enormous practical hurdles to becoming a primary energy source, as discussed above); and increased energy efficiency, by itself, does not appear to lower consumption due to the well-studied rebound effect, wherein efficiency tends to make energy cheaper so that people can then afford to use more of it.29

Once again: energy efficiency is a worthy goal. When we exchange old incandescent light bulbs for new LED lights that use a fraction of the electricity and last far longer, we save energy and resources — and that’s a good thing. Full stop.

At the same time, it’s important to have a realistic understanding of efficiency’s limits. Boosting energy efficiency requires investment, and investments in energy efficiency eventually reach a point of diminishing returns. Just as there are limits to resources, there are also limits to efficiency. Efficiency can save money and lead to the development of new businesses and industries. But the potential for both savings and economic development is finite.

Let’s explore further the example of energy efficiency in lighting. The transition from incandescent lighting to the use of compact fluorescents is resulting in dramatic efficiency gains. A standard incandescent bulb produces about 15 lumens per watt (lm/W), while a compact fluorescent (CFL) can yield 75 lm/W, a five-fold increase in efficiency. But how much more improvement is possible? LED lights currently under development should deliver about 150 lm/W, twice the current efficiency of CFLs. But the theoretical maximum efficiency for producing white light from electricity is about 300 lumens per watt, so only another doubling of efficiency is feasible once these new LEDs are in wide use.30
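The diminishing returns in this example become clearer when the efficiency figures are turned into the power needed for a fixed amount of light. The sketch below uses the four efficiency values from the text; the 1,500-lumen target is an arbitrary choice made for round numbers.

```python
# Lumens per watt for each technology, as quoted in the text.
EFFICACY_LM_PER_W = [
    ("incandescent", 15.0),
    ("compact fluorescent", 75.0),
    ("LED under development", 150.0),
    ("theoretical maximum", 300.0),
]


def watts_needed(lumens: float, lm_per_w: float) -> float:
    """Electrical power required to produce a given light output."""
    return lumens / lm_per_w


def stepwise_savings(lumens: float):
    """Power needed at each stage, and watts saved relative to the previous stage."""
    rows = []
    previous_watts = None
    for name, efficacy in EFFICACY_LM_PER_W:
        watts = watts_needed(lumens, efficacy)
        saved = 0.0 if previous_watts is None else previous_watts - watts
        rows.append((name, watts, saved))
        previous_watts = watts
    return rows


for name, watts, saved in stepwise_savings(1500.0):
    print(f"{name}: {watts:.0f} W needed, {saved:.0f} W saved versus previous stage")
```

Each step multiplies efficiency by a respectable factor, yet the watts actually saved shrink at every stage, because most of the waste was eliminated by the first switch. The last doubling, to the theoretical maximum, recovers only a small fraction of what the move away from incandescent bulbs did.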

Moreover, energy efficiency is likely to look very different in a resource- and growth-constrained economy from how it does in a wealthy, growing, and resource-rich economy.

Permit me to use the example of my personal experience to illustrate the point. Over the past decade, my wife Janet and I have installed photovoltaic solar panels on our suburban house, as well as a solar hot water system. In the warmer months we often use solar cookers and a solar food dryer. We insulated our house as thoroughly as was practical, given the thickness of existing exterior walls, and replaced all our windows. We built a solar greenhouse onto the south side of the house to help collect heat. And we also bought a more fuel-efficient small car, as well as bicycles and an electric scooter.

All of this took years and lots of work and money (several tens of thousands of dollars). Fortunately, during this time we had steady incomes that enabled us to afford these energy-saving measures. But it’s fair to say that we have yet to save nearly enough energy to justify our expenditures from a dollars-and-cents point of view. Do I regret any of it? No. As energy prices rise, we’ll benefit increasingly from having invested in these highly efficient support systems.

But suppose we were just starting the project today. And let’s also assume that, like millions of Americans, we were finding our household income declining now rather than growing. Rather than buying that new fuel-efficient car, we might opt for a 10-year-old Toyota Corolla or Honda Civic. If we could afford the PV and hot water solar systems at all, we would have to settle for scaled-back versions. For the most part, we would economize on energy just by cutting corners and doing without.

The way Janet and I pursued our quest for energy efficiency helped America’s GDP: We put people to work and boosted the profits of several contractors and manufacturing companies. The way people in hard times will pursue energy efficiency will do much less to boost growth — and might actually do the opposite.

What was true for Janet and me is in many ways also true for society as a whole. America could improve its transportation energy efficiency dramatically by investing in a robust electrified rail system connecting every city in the nation.31 Doing this would cost roughly $600 billion, but it would lead to dramatic reductions in oil consumption, thus lowering the US trade deficit and saving enormous amounts in fuel bills. At the same time, the US could rebuild its food system from the ground up, localizing production and eliminating fossil fuel inputs wherever possible. Doing so would increase the resilience of the system, the health of consumers, and the quality of the environment, while generating millions of employment or business opportunities.32 The cost of this food system transition is difficult to calculate accurately, but it would no doubt be substantial.

However, the nation would be getting a late start on these efforts. We could fairly easily have afforded to do these things over the past few decades when the economy was growing. But building highways and industrializing and centralizing our food system generated profits for powerful interests, and the vulnerabilities we were creating by relying increasingly on freeways and big agribusiness were only obvious to those who were actually paying attention — a small and easily overlooked demographic category in America these days. As oil becomes more scarce and expensive, more of the real costs of our reliance on cars and industrial food will become apparent, but our ability to opt for rails and local organic food systems will be constrained by lack of investment capital. We will be forced to adapt in whatever ways we can afford. Where prior investments have been made in efficient transport infrastructure and resilient food systems, people will be better off as a result.

To the degree that energy efficiency helps us adapt to a shrinking economy and more expensive energy, it will be essential to our survival and well being. The sooner we invest in efficient ways of meeting our basic needs the better, even if it entails short-term sacrifice. However, to hope that efficiency will produce a continuous reduction in energy consumption while simultaneously yielding continuous economic growth is unrealistic.

Business Development: The Cavalry’s on the Way

A remarkable book appeared in 2004 to almost no fanfare and little critical notice. The author was Mats Larsson, a Swedish business consultant, and his book was titled The Limits of Business Development and Economic Growth.33 Unlike the thousands of business books published each year that promise to help managers become more effective, or that hint at new opportunities for profit, Larsson’s conveyed a sobering message, one that the business community evidently didn’t want to hear: Our human ability to invent genuinely new activities is probably limited, and most recent inventions have consisted merely of finding ways to speed up activities that humans have been performing for a very long time (communicating, transporting themselves and their goods, trading, and manufacturing). These processes can only be taken to the limits where things can be done in almost no time and at a very low cost, and we are fast approaching those limits.

“Through centuries and millennia,” Larsson writes, “humans have struggled to simplify production and make tools and products less expensive and easier to manufacture.” Possible examples are legion from virtually every industry — from telecommunications to air travel. “Now we are finally in a situation where many things can be done in close to no time and at a very low cost.”34 He goes on:

[A]t close scrutiny we do not seem to have done anything except gradually automate activities that human beings have been performing for a few hundred, and sometimes thousand, years already. The development of a large number of different technologies that help us to automate these tasks has driven economic development and business proliferation in the past. Now, technological progress is at the stage where a number of these technologies and products have been developed to a point where we cannot realistically expect them to develop much further. And, despite widespread belief of the opposite, we cannot be certain that there are enough new products or technologies left to be developed for companies to be able to make use of the resources that are going to be freed from existing industries.35

For the skeptical reader such sweeping statements bring to mind the reputed pronouncement by former IBM president Tom Watson in 1943, “I think there is a world market for maybe five computers.” Fortunes continue to be made from new products and business ideas like the iPad, Facebook, 3D television, Blu-ray, cloud computing, biotech, and nanotech; soon we’ll have computer-controlled 3D printing. However, Larsson would argue that these are in most cases essentially extensions of existing products and processes. He explicitly cautions that he is not saying that further improvements in technology and business are no longer possible, but rather that, taken together, they will tend to yield diminishing returns for the economy as a whole as compared to innovations and improvements years or decades ago.

Fundamentally new technologies, products, and trends in business (as opposed to minor tweaks in existing ones) tend to develop at a slow pace. “Many of the big, resource-consuming trends of the near past are soon coming to an end in terms of their ability to attract investment and cover the cost of resources for development, production, and implementation.”

Back in the late 1990s business was buzzing with talk of a “new economy” based on e-commerce. Internet start-up companies attracted enormous amounts of investment capital and experienced rapid growth. But while e-commerce flourished, many expectations about profit opportunities and rates of growth proved unrealistic.

Automation has reached the point where most businesses need dramatically fewer employees. “Presumably, this should make companies more profitable and increase their willingness to invest in new products and services,” writes Larsson. “It does not. Instead, there is competition between more and more equal competitors, and all are forced to reduce prices to get their goods sold. The advantages of leading companies are getting smaller and smaller and it is becoming ever more difficult to find areas where unique advantages can be developed.”36 Regardless of the industry, “Most companies tend to use the same standard systems and more and more companies arrive at a situation where time and cost have been reduced to a minimum.”37

It is bitterly ironic that so much success could lead to an ultimate failure to find further paths toward innovation and earnings.

Larsson estimated in 2004 that impediments to business development would begin to appear in the decade 2005–2015. His analysis did not take into account limits to the world’s supplies of fossil fuels, nor declines worldwide in the amount of energy returned on efforts spent in obtaining energy. Neither did he examine limits to debt or declining ore quality for minerals essential to industry.38 Remarkably, though, his forecast — based entirely on trends within business — points to an expiration date for global growth that coincides with forecasts based on credit and resource limits. This convergence of trends may not be merely coincidental: after all, automation is fed by cheap energy, and business growth by debt. Limits in one area tighten further the restrictions in others.

BOX 4.2 How Much More Improvement Is Possible — Or Needed?

A couple of centuries ago, if two people in distant cities wished to communicate, one of them traveled by foot, horse cart, or boat (or sent a messenger). It may have taken weeks. Now, using a cell phone, we can communicate almost instantly. A scholar might have had to travel to a distant library to access a particular piece of information. Today we can access all kinds of information via the Internet at almost no cost in almost no time. An ancient Sumerian would have used a clay tablet and wooden stylus for accounting. Now we use computers with business software to keep track of vastly more numerous transactions automatically in almost no time and at almost no cost.

While we are never likely to reach zero in terms of time and cost, we can be certain that the closer we get to zero time and cost, the higher the cost of the next improvement and the lower the value of the next improvement will be. This means that, with regard to each basic human technologically mediated pursuit (communication, transportation, accounting, and so on) we will sooner or later reach a point where the cost of the next improvement will be higher than its value.

We may be able to further improve the functionality of the Microsoft Office software package, the speed of transactions on the computer, computer storage capacity, or the number of sites available on the Internet. Yet on many of these development trajectories we will face a point when the value of yet another improvement will be lower than its cost to the consumer. At this point, further product “improvements” will be driven almost solely by aesthetic considerations identified by advertisers and marketers rather than by improvements achieved by engineers or inventors. For many consumer products this stage was reached decades ago.39

Moore’s or Murphy’s Law?

It is a truism in most people’s minds that the most important driver of economic growth is new technology. Important innovations, from the railroad and the telegraph up through the satellite and the cell phone, have generated fortunes while creating markets and jobs. It may seem downright cynical to suggest that we won’t see more of the same, leading to an abundant, technotopian future in which humanity has colonized space and all our needs are taken care of by obedient robots. But once again, there may be limits.

The idea that technology will continue to improve dramatically is often supported by reference to Moore’s law. Over the past three decades, the number of transistors that can be placed inexpensively on an integrated circuit has doubled approximately every two years. Computer processing speed, memory capacity, and the number of pixels per dollar in digital cameras have all followed the same trajectory. This “law” is actually better thought of as a trend — but it is a trend that has continued for over a generation and is not expected to stop until 2015 or later. According to technology boosters, if the same innovative acumen that has led to Moore’s law were applied to solving our energy, water, climate, and food problems, those problems would disappear in short order.

My first computer was an early laptop, circa 1986. It cost $1600 and had no hard disk (just two floppy drives), plus a small non-backlit, black-and-white LCD screen. It boasted 640K of RAM and a processing speed of 9.54 MHz. I thought it was wonderful! Its capabilities dwarfed those of the Moon-landing Apollo 11 spacecraft on-board computer, developed by NASA over a decade earlier.

My most recent laptop cost $1200 (that’s a lot to pay these days, but it’s a deluxe model), has a 250 gigabyte hard drive (holding about 200,000 times as much data as you could cram onto an old 3.5 inch floppy disk), 4 Gigs of internal memory, and a processing speed of 2.4 gigahertz. Its color LCD screen is stunning, and it does all sorts of things I could never have dreamed of doing with my first computer: it has a built-in camera so I can take still or moving pictures, it has sound, it plays movies — and, of course, it connects to the Internet! Many of those features are now standard even on machines selling for $300.

From 1986 to today, in just 25 years, the typical consumer-grade personal computer has increased in performance thousands of times over while dropping in price — noticeably so if inflation is factored in.40
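To see how a two-year doubling period compounds into a gain of “thousands of times over,” here is a rough Python sketch. It rests on several assumptions not stated in the text: that “640K” and “4 Gigs” are binary units, that the drive’s 250 gigabytes are decimal, and that the old floppy held 1.44 MB. All names are illustrative.

```python
def doublings(years, doubling_period_years=2.0):
    """Number of doublings that fit into a span of years."""
    return years / doubling_period_years


def growth_factor(years, doubling_period_years=2.0):
    """Compound growth implied by a fixed doubling period."""
    return 2 ** doublings(years, doubling_period_years)


YEARS = 25  # 1986 to 2011, as in the text

# Specs of the two laptops described above.
ram_1986_kib = 640
ram_2011_kib = 4 * 1024 * 1024   # assumption: "4 Gigs" means 4 GiB
clock_1986_mhz = 9.54
clock_2011_mhz = 2400            # 2.4 gigahertz
floppy_mb = 1.44                 # assumption: high-density 3.5-inch floppy
disk_mb = 250_000                # assumption: 250 GB in decimal megabytes

ram_ratio = ram_2011_kib / ram_1986_kib
clock_ratio = clock_2011_mhz / clock_1986_mhz
disk_ratio = disk_mb / floppy_mb

print(f"{doublings(YEARS):.1f} doublings -> about {growth_factor(YEARS):,.0f}x")
print(f"memory:      about {ram_ratio:,.0f}x")
print(f"clock speed: about {clock_ratio:,.0f}x")
print(f"storage vs. one floppy: about {disk_ratio:,.0f}x")
```

Twelve and a half doublings imply a gain of roughly 5,800-fold, which is the same order of magnitude as the memory comparison between the two machines. Raw clock speed rose far less under these assumptions, a reminder that the trend describes transistor counts rather than any single specification.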

So why hasn’t the same thing happened with energy, transportation, and food production during this period? If it had, by now a new car would cost $750 and get 2000 miles to the gallon. But of course that’s not the case. Is the problem simply that engineers in non-computer industries are lazy?

Of course not. It’s because microprocessors are a special case. Moving electrons takes a lot less energy than moving tons of steel or grain. Making a two-ton automobile requires a heap of resources, no matter how you arrange and rearrange them. In many of the technologies that are critically important in our lives, recent decades have seen only minor improvements — and many or most of those have come about through the application of computer technology.41

Take the field of ground transportation (the example I’m about to use is also relevant to the energy efficiency and substitution discussions earlier in this chapter). We could make getting to and from stores and offices far more efficient by installing personal rapid transit (PRT) systems in every city in the world. PRT consists of small, automated vehicles operating on a network of specially built guide-ways (a pilot system has been built at Heathrow airport in London). The energy-per-passenger-mile efficiency of PRT promises to be much greater than that for personal automobiles, even electric ones, and greater even than for trolleys, streetcars, buses, subways, and other widely deployed forms of public transit. According to some estimates, a PRT system should attain an energy efficiency of 839 Btu per passenger mile (0.55 MJ per passenger km), as compared to the 3,496 Btu per passenger mile average for automobiles and 4,329 Btu for personal trucks.42
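The unit conversion and the size of the claimed advantage are easy to check. The Python sketch below uses the three Btu-per-passenger-mile figures quoted above, together with standard conversion factors (about 1,055.06 joules per Btu and 1.609344 kilometers per mile); the function name and the dictionary are illustrative.

```python
BTU_TO_JOULES = 1055.06   # International Table Btu, approximate
MILE_TO_KM = 1.609344


def btu_per_mile_to_mj_per_km(btu_per_mile):
    """Convert Btu per passenger mile to megajoules per passenger km."""
    joules_per_km = btu_per_mile * BTU_TO_JOULES / MILE_TO_KM
    return joules_per_km / 1e6


# Figures quoted in the text, in Btu per passenger mile.
MODES = {
    "PRT (estimated)": 839,
    "automobile": 3496,
    "personal truck": 4329,
}

prt = MODES["PRT (estimated)"]
for mode, btu in MODES.items():
    mj_per_km = btu_per_mile_to_mj_per_km(btu)
    print(f"{mode}: {mj_per_km:.2f} MJ per passenger km, "
          f"{btu / prt:.1f}x the PRT estimate")
```

The PRT estimate does convert to about 0.55 MJ per passenger km, and on these numbers the average automobile uses a little over four times as much energy per passenger mile, and the personal truck a little over five times.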

By the time we have shifted all local human transport to PRT, we may be approaching the limits of what is possible to achieve in terms of motorized, relatively high-speed transport energy efficiency. But to do this we will need massive investment, policy support, and the development of consumer demand. PRT may be an excellent idea, but its implementation is moving at a glacial pace — there’s nothing “rapid” about it.

Far from already having implemented the most efficient transit systems imaginable, we find ourselves today even more dependent on cars and trucks than we were a half-century ago. Moreover, the typical automobile of 2011 is essentially similar to one from 1960: both are mostly made from steel, glass, aluminum, and rubber; both run on gasoline; both have similar basic parts (engine, transmission, gas tank, wheels, seats, body panels, etc.). Granted, today’s car is more energy-efficient and sophisticated — largely because of the incorporation of computerized controls over its various systems. Much the same could be said for modern aircraft, as well as for the electricity grid system, water treatment and delivery systems, farming operations, and heating and cooling systems. Each of these is essentially a computer-assisted, somewhat more efficient version of what was already common two generations ago.

True, the field of home entertainment has seen some amazing technical advances over the past five decades — digital audio and video; the use of lasers to read from and record on CDs and DVDs; flat-screen, HD, and now 3D television; and the move from physical recorded media to distribution of MP3 and other digital recording formats over the Internet. Yet when it comes to how we get our food, water, and power, and how we transport ourselves and our goods, relatively little has changed in any truly fundamental way.

The nearly miraculous developments in semiconductor technologies that have revolutionized computing, communications, and home entertainment during the past few decades have led us to think we’re making much more “progress” than we really are, and that more potential for development in some fields exists than really does. The slowest-moving areas of technology are, understandably, the ones that involve massive infrastructure that is expensive to build and replace. But these are the technologies on which the functioning of our civilization depends.

In fact, rather than showing evidence of great technological advance, our basic energy, water, and transport infrastructure shows signs of senescence, and of vulnerability to Murphy’s law — the maxim that anything that can go wrong, will go wrong. In city after city, water and sewer pipes are aging and need replacement. The same is true of our electricity grids, natural gas pipes, roads, bridges, dams, airport runways, and railroads.

I live in Sonoma County, California, where officials declared last year that 90 percent of county roads will be allowed to deteriorate and gradually return to gravel, simply because there’s no money in the budget to pay for continued repairs. Perhaps someone who lives on one of these Sonoma County roads will mail-order the latest MacBook Air (a shining aluminum-clad example of Moore’s law) for delivery by UPS — only to be disappointed by the long wait because a delivery truck has broken its axle in a pothole (a dusty example of Murphy’s law).

According to Ken Kirk, executive director of the National Association of Clean Water Agencies, more than 1,000 aging water and sewer systems around the US need urgent upgrades.43 “Urgent” in this instance means that if infrastructure projects aren’t undertaken now, the ability of many cities to supply drinking water in the years ahead will be threatened. The cost of renovating all these systems is likely to amount to between $500 billion and $1 trillion.

The failure of innovation and new investment to keep up with the decay of existing infrastructure is exemplified also in the fact that the world’s global positioning system (GPS) is headed for disaster. Last year, the US Government Accountability Office (GAO) published a report noting that GPS satellites are wearing down and, if no new investments are made, the accuracy of the positioning system will gradually decline.44 At some point during the next few decades, the whole system may crash. The GPS system happens to be one of the glowing highlights of recent technological progress. We depend on it not just for piloting Lincoln Navigators across the suburbs, but for guiding tractors through giant cornfields; for mapping, construction, and surveying; for scientific research; for moving troops in battle; and for dispatching emergency response vehicles to their appointed emergencies. How could we have allowed such an important piece of infrastructure to become so vulnerable?

There is one more reason to be skeptical about the capability of technological innovation across a broad range of fields to maintain economic growth, and though I have saved it to the end it is by no means a minor point. As verified in the research of the late Professor Vernon W. Ruttan of the University of Minnesota in his book Is War Necessary for Economic Growth?: Military Procurement and Technology Development, many large-scale technological developments of the past century depended on government support during early stages of research and development (computers, satellites, the Internet) or build-out of infrastructure (highways, airports, and railroads).45 Ruttan studied six important technologies (the American mass production system, the airplane, space exploration and satellites, computer technology, the Internet, and nuclear power) and found that strategic, large-scale, military-related investments across decades on the part of government significantly helped speed up their development. Ruttan concluded that nuclear technology could not have been developed at all in the absence of large-scale and long-term government investments.

If, in the years ahead, government remains hamstrung by overwhelming levels of debt and declining tax revenues, investment that might lead to major technological innovation and infrastructure build-out is likely to be highly constrained. Which is to say, it probably won’t happen — absent a wartime mobilization of virtually the entire economy.

We’re counting on Moore’s law while setting the stage for Murphy’s.

Specialization and Globalization: Genies at Our Command

Economic efficiency doesn’t flow from energy efficiency alone; it can also be achieved by increasing specialization or by expanding the scope of trade so as to exploit cheaper resources or labor. Both of these strategies have deep and ancient roots in human history.46

Division of labor increases economic efficiency by optimizing the use of people’s unique talents, proclivities, and skills. If all people had to grow or gather all of their own food and fuel, the effort might require most of their working hours. By leaving food production to skilled farmers, we enable others to spend their days weaving cloth, playing the oboe, or screening hand-carried luggage at airports.47 Prior to the agricultural revolution several millennia ago, division of labor was mostly along gender lines, and was otherwise part-time and informal; with farming and the settling of the first towns and cities, full-time division of labor appeared, along with social classes. Since the Industrial Revolution, the number of full-time occupations has soared.

If economists often underestimate the contribution of energy to economic growth, it would be just as wrong to disregard the role of specialization. Adam Smith, who was writing when Britain was still burning relatively trivial amounts of coal, believed that economic expansion would come about entirely because of division of labor. His paradigm of progress was the pin-making factory:

I have seen a small manufactory of this kind where ten men only were employed.... But though they were very poor, and therefore but indifferently accommodated with the necessary machinery, they could, when they exerted themselves, make among them about twelve pounds of pins in a day. There are in a pound upwards of four thousand pins of a middling size. Those ten persons, therefore, could make among them upwards of forty-eight thousand pins in a day. Each person, therefore, making a tenth part of forty-eight thousand pins, might be considered as making four thousand eight hundred pins in a day. But if they had all wrought separately and independently, and without any of them having been educated to this peculiar business, they certainly could not each of them have made twenty, perhaps not one pin in a day....48
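Smith’s arithmetic can be retraced in a few lines. The Python sketch below uses only the figures in the passage, treating “upwards of four thousand” as exactly 4,000 and taking his upper bound of twenty pins a day for a lone untrained worker; the variable names are illustrative.

```python
POUNDS_PER_DAY = 12       # output of the ten-man workshop
PINS_PER_POUND = 4_000    # "upwards of four thousand", taken as a floor
WORKERS = 10
SOLO_PINS_PER_DAY = 20    # Smith's upper bound for one untrained worker

total_pins = POUNDS_PER_DAY * PINS_PER_POUND
pins_per_worker = total_pins // WORKERS
productivity_multiplier = pins_per_worker / SOLO_PINS_PER_DAY

print(f"workshop output: {total_pins:,} pins per day")
print(f"per worker:      {pins_per_worker:,} pins per day")
print(f"gain over working alone: at least {productivity_multiplier:.0f}x")
```

Even on Smith’s most generous assumption about the lone worker, division of labor multiplies output per person by a factor of at least 240.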

Later in The Wealth of Nations, Smith criticizes the division of labor, saying it leads to a “mental mutilation” in workers as they become ignorant and insular — so it’s hard to know whether he thought the trend toward specialization was good or just inevitable. It’s important to note, however, that it was under way before the fossil fuel revolution, and was already contributing to economic growth.

Standard economic theory tells us that trade is good. If one town has apple orchards but no wheat fields, while another town has wheat but no apples, trade can make everyone’s diet more interesting. Enlarging the scope of trade can also reduce costs, if resources or products are scarce and expensive in one place but abundant and cheap elsewhere, or if people in one place are willing to accept less payment for their work than in another.

As mentioned in Chapter 1, trade has a long history and a somewhat controversial one, given that empires typically used military power to enforce trade rules that kept peripheral societies in a condition of relative poverty and dependency. The worldwide colonial efforts of European powers from the late 15th century through the mid-20th century exemplify this pattern; since then, enlargement of the scope of trade has assumed a somewhat different character and is now referred to as globalization.

Long-distance trade expanded dramatically from the 1980s onward as a result of the widespread use of cargo container ships, the development of satellite communications, and the application of computer technology. New international agreements and institutions (WTO, NAFTA, CAFTA, etc.) also helped speed up and broaden trade, maximizing efficiencies at every stage.

It may be that people were happier before trade, when they were embedded in more of a gift economy. But the material affluence of the world’s wealthy nations simply cannot be sustained without trade at current levels. Indeed, without expanding trade, the world economy cannot grow. Period.49

Most economists regard division of labor and globalization as strategies that can continue to be expanded far into the future. To think otherwise would be to question the possibility of endless economic growth. But there are reasons to question this belief.

There may not be much more to achieve through specialization in the already-industrialized countries, as most tasks that can possibly be professionalized, commercialized, segmented, and apportioned already have been. Moreover, in a world of declining energy availability, the trend toward specialization could begin to be reversed. Specialization goes hand-in-hand with urbanization, and in recent decades urbanization has depended on surplus agricultural production from the industrialization of agriculture, which in turn depends on cheap oil.50

Relegating all food production to full-time farmers leaves more time for others to do different kinds of work. But in non-industrial agricultural societies, the class of farmers includes most of society. The specialist classes (soldiers, priests, merchants, scribes, and managers) are relatively small. It was only with the application of fossil fuels (and other strategies of intensification) to agriculture that we achieved a situation where, as in the US today, a mere two percent of the population grows nearly all the domestically produced food, freeing the other 98 percent to work at a dizzying variety of other jobs.

In other words, most of the specialization that has occurred since the beginning of the Industrial Revolution depended upon the availability of cheap energy. With cheap energy, it makes sense to replace human muscle-powered labor with the “labor” of fuel-fed machines, and it is possible to invent an enormous number of different kinds of machines to do different tasks. Tending and operating those machines requires specialized skills, so more mechanization tends to lead to more specialization.

But take away cheap energy and it becomes more cost-effective to do a growing number of tasks locally and with muscle power once again. As energy gets increasingly expensive, a countertrend is therefore likely to emerge: generalization. Like our ancestors of a century ago or more, most of us will need the kinds of knowledge and skill that can be adapted to a wide range of practical tasks.

Globalization will suffer a similar fate, as it is vulnerable not only to high fuel prices, but grid breakdowns, political instability, credit and currency problems, and the loss of satellite communications.

Jeff Rubin, the former chief economist at Canadian Imperial Bank of Commerce (CIBC) World Markets, is the author of Why Your World Is About To Get a Whole Lot Smaller: Oil and the End of Globalization, which argues that the amounts of food and other goods imported from abroad will inevitably shrink, while long-distance driving will become a luxury and international travel rare.51 Given time, he says, we could develop advanced sailing ships with which to resume overseas trade. But even then moving goods across land will require energy, so if we have less energy that will almost certainly translate to less mobility.

The near future will be a time that, in its physical limits, may resemble the distant past. “The very same economic forces that gutted our manufacturing sector,” says Rubin, “that paved over our farm land, when oil was cheap and abundant, and transport costs were incidental, those same economic forces will do the opposite in a world of triple digit oil prices. And that is not determined by government, and that is not determined by ideological preference, and that is not determined by our willingness or unwillingness to reduce our carbon trail. That is just Economics 100. Triple digit oil price is going to change cost-curves. And when it changes cost-curves, it is going to change economic geography at the same time.”52

Despite their economic advantages, specialization and globalization in some ways reduce resilience, a quality that is essential to our adapting to the end of growth. Extremely specialized workers may have difficulty accommodating themselves to the economic necessities of the post-growth world. In World War II, auto assembly plants in the US could be quickly re-purposed to produce tanks and planes for the war effort; today, when we need auto factories to make electric railroad locomotives and freight/passenger cars, the transition will be much more difficult because machines as well as workers are much more narrowly specialized. Moreover, dependence on global systems of trade and transport will leave many communities vulnerable if needed tools, products, materials, and spare parts are no longer available or are increasingly expensive due to rising transport costs. Successful adaptation will require economic re-localization and a generalist attitude toward problem solving.

Whether we like or hate globalization and specialization, we will nevertheless have to bend to the needs of an energy-constrained economy — and that will mean relying more on local resources and production capacity, and being able to do a broader range of tasks.

Of course, this is not to say that all activities will be localized, that trade will disappear, or that there will be no specialization. The point is simply that the recent extremes achieved in the trends toward specialization and globalization cannot be sustained and will be reversed. How far we will go toward being local generalists depends on how we handle the energy transition of the 21st century — or, in other words, how much of technological civilization we can preserve and adapt.

The near-religious belief that economic growth depends not on energy and resources, but solely on increasing innovation, efficiency, trade, and division of labor, can sometimes lead economists to say silly things.

Some of the silliest and most extreme statements along these lines are to be found in the writings of the late Julian Simon, a longtime business professor at the University of Illinois at Urbana-Champaign and Senior Fellow at the Cato Institute. In his 1981 book The Ultimate Resource, Simon declared that natural resources are effectively infinite and that the process of resource substitution can go on forever. There can never be overpopulation, he declared, because having more people just means having more problem-solvers.

How can resources be infinite on a small planet such as ours? Easy, said Simon. Just as there are infinitely many points on a one-inch line segment, so too there are infinitely many lines of division separating copper from non-copper, or oil from non-oil, or coal from non-coal in the Earth. Therefore, we cannot reliably quantify how much copper, oil, coal, neodymium, or gold there really is in the world. If we can’t measure how much we have of these materials, that means the amounts are not finite; thus they are infinite.53

It’s a logical fallacy so blindingly obvious that you’d think not a single vaguely intelligent reader would have let him get away with it. Clearly, an infinite number of dividing lines between copper and non-copper is not the same as an infinite quantity of copper. While a few critics pointed this out (notably Herman Daly), Simon’s book was widely praised nevertheless.54 Why? Because Simon was saying something that many people wanted to believe.

Simon himself is gone, but his way of thinking is alive and well in the works of Bjorn Lomborg, author of the bestselling book The Skeptical Environmentalist and star of the recent documentary film “Cool It.”55 Lomborg insists that the free market is making the environment ever healthier, and will solve all our problems if we just stop scaring ourselves needlessly about running out of resources.

It’s a convenient “truth,” a message that’s appealing not only because it’s optimistic, but because it confirms a widespread, implicit belief that technology is equivalent to magic and can do anything we wish it to. Economists often talk about the magic of exponential growth through compound interest; with financial magic we can finance new technological magic. But in the real world there are limits to both kinds of “magic.” Modern industrial technology has certainly accomplished miracles, but we tend to ignore the fact that it is, for the most part, merely a clever set of means for using a temporary abundance of cheap fossil energy to speed up and economize things we had already been doing for a very long time.

Many readers will say it’s absurd to assert that technology is subject to inherent limits. They may recall an urban legend according to which the head of the US Patent Office in 1899 said that the office should be closed because everything that could be invented already had been invented (there’s no evidence he actually did say this, by the way).56 Aren’t claims about limits to substitution, efficiency, and business development similarly wrong-headed now?

Not necessarily. Humans have always had to face social as well as resource limits. While the long arc of progress has carried us from knives of stone to Predator drones, there have been many reversals along the way. Civilizations advance human knowledge and technical ability, but they also tend to generate levels of complexity they cannot support beyond a certain point. When that point is reached, civilizations decline or collapse.57

I am certainly not saying that we humans won’t continue to invent new kinds of tools and processes. We are a cunning breed, and invention is one of our species’ most effective survival strategies. However, the kinds of inventions we came up with in the 19th and 20th centuries were suited to human needs and interests in a world where energy and materials were cheap and the amounts available were quickly expanding. Inventions of the 21st century will be ones suited to a world of expensive, declining energy and materials.